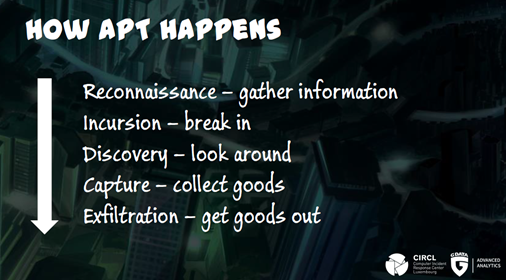

IoC lists and their shortcomings

Most malware analyses published on vendor pages end with a list of Indicators of Compromise (IoCs).

Most of the time, these lists consist of data points such as the IP addresses contacted by the analyzed piece of malware. Should the firewall logs show a connection to one of those IP addresses, it is usually safe to say that something is wrong in an organization's network. In addition, the analyses also list checksums of files and processes associated with the threat. This information helps security teams locate affected files and processes in time.
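To illustrate how such a list is typically used, here is a minimal sketch that checks firewall log lines against a set of IoC IP addresses and checks file contents against a set of SHA-256 checksums. The log format, the function names `match_connections` and `match_file`, and all IP addresses and hashes are hypothetical placeholders (the IPs come from reserved documentation ranges), not real indicators from any vendor report.

```python
import hashlib

# Hypothetical IoC list: placeholder values only, not real indicators.
IOC_IPS = {"192.0.2.10", "198.51.100.23"}
IOC_SHA256 = {hashlib.sha256(b"known malicious payload").hexdigest()}


def match_connections(log_lines):
    """Return the log lines whose destination IP appears in the IoC list.

    Assumes a simplified, hypothetical log format: '<timestamp> <src_ip> -> <dst_ip>'.
    """
    hits = []
    for line in log_lines:
        dst_ip = line.rsplit("->", 1)[-1].strip()
        if dst_ip in IOC_IPS:
            hits.append(line)
    return hits


def match_file(data: bytes) -> bool:
    """Return True if the SHA-256 checksum of the file contents is a listed IoC."""
    return hashlib.sha256(data).hexdigest() in IOC_SHA256


logs = [
    "2017-01-01T10:00:00 10.0.0.5 -> 203.0.113.7",
    "2017-01-01T10:00:05 10.0.0.8 -> 192.0.2.10",
]
print(match_connections(logs))
print(match_file(b"known malicious payload"))
```

Both checks are simple set lookups, which is exactly why this kind of IoC is cheap to create and act on.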

The advantage of these data points is that they are easy to create and to base a response on. Their drawback is that some of them are relatively volatile. It takes very little effort on an attacker's part to render an IoC list useless, e.g. by changing the IP addresses or by making minor changes to a binary file. Even a minor change gives the entire file a different checksum, and the security team is none the wiser despite having an extensive IoC list.
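The following sketch shows why checksum-based IoCs are so brittle: flipping a single bit in one byte of a file yields a completely different SHA-256 digest. The byte buffer is an arbitrary stand-in for a binary, and the position of the flipped bit is chosen arbitrarily for illustration.

```python
import hashlib

# Arbitrary stand-in for a binary file (1024 bytes).
original = bytes(range(256)) * 4

# Simulate an attacker's "minor change": flip one bit in a single byte.
patched_buf = bytearray(original)
patched_buf[100] ^= 0x01
patched = bytes(patched_buf)

h_original = hashlib.sha256(original).hexdigest()
h_patched = hashlib.sha256(patched).hexdigest()

# Count how many bytes of the file changed versus how many hex digits of the hash changed.
changed_bytes = sum(a != b for a, b in zip(original, patched))
changed_digits = sum(a != b for a, b in zip(h_original, h_patched))

print(changed_bytes)
print(h_original == h_patched)
print(changed_digits, "of", len(h_original), "hex digits differ")
```

A one-byte difference in the file is enough for the patched sample to slip past any list that only contains the original checksum.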

Hard (and expensive) evidence data

Because some classic IoCs, such as checksums and domain lists, are so volatile, other data points need to be established to mount an effective defense strategy. To this end, Marschalek and Vinot are developing concepts that look deeper into malicious files to find evidence that is not as easily altered and that allows reliable detection and attribution.

This, however, is where the crux lies: attackers know fairly well how analysts work, and they go to great lengths to disguise the features and functions of their malware. Techniques such as code obfuscation and sandbox evasion have long been part and parcel of many types of malware, which makes it more difficult for analysts to automate sample processing. Every now and then, manual work is required, and it is this work that makes extracting data from the files comparatively expensive.

On the other hand, the features extracted from files in this manner allow much more reliable and consistent detection and attribution of a malicious file than 'softer', more volatile IoCs, which are subject to change at any time.
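As a rough illustration of the contrast between checksums and deeper features, the sketch below compares two hypothetical variants of a sample. Their raw bytes differ, so their hashes differ, but most of the extracted features are shared. The feature names and the use of Jaccard similarity are assumptions chosen purely for illustration; they are not the method developed by Marschalek and Vinot.

```python
import hashlib


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical features an analyst might extract from two variants of a sample.
# These names are illustrative placeholders, not taken from the original research.
variant_a = {
    "api:CreateMutexW", "api:InternetOpenUrlA", "const:0x1337C0DE",
    "string:config_key_v2", "crypto:custom_xor_loop",
}
variant_b = {
    "api:CreateMutexW", "api:InternetOpenUrlA", "const:0x1337C0DE",
    "string:config_key_v3", "crypto:custom_xor_loop",
}

# The raw files differ (simulated here by different byte strings), so their hashes differ...
hash_a = hashlib.sha256(b"variant A bytes").hexdigest()
hash_b = hashlib.sha256(b"variant B bytes").hexdigest()
print(hash_a == hash_b)

# ...but the variants still share most of their extracted features.
print(round(jaccard(variant_a, variant_b), 2))
```

Here four of six distinct features are shared, so the two variants remain recognizably related even though no checksum links them.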

More information

If you are curious about what challenges await researchers, head over to the blog of G DATA Advanced Analytics and read the full article there.